Seeing Time

If you can see time, you can think with it.

And if you can think with it, humans and machine partners can actually work together.

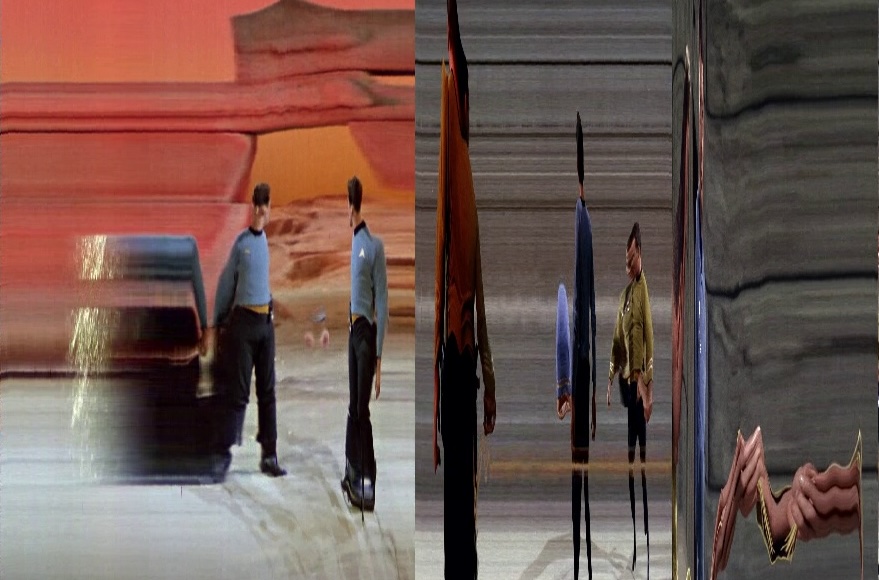

At HMMC (Human–Machine–Mind Corporation), we treat time not just as a clock but as something you can see, browse, and compose: a structural element that makes complex worlds legible. Our early work in film analysis asked a simple question: can you see the rhythm of a movie the way you hear a melody? That inquiry led to movie maps: visual index systems that turn linear streams into layered, navigable representations. The result isn’t just a nicer timeline; it’s a new way to think with time, with links that jump along chains of cause and effect, patterns that recur across scenes, and structural motifs you feel before you read.

Why “seeing” time matters

Humans reason with stories; machines reason with states. Real projects—clinical pathways, mixed-reality operations, cultural-heritage pipelines—need a shared temporal map:

- For people: make long processes legible—what happened, where we are, what comes next.

- For machines: expose structure—events, transitions, dependencies—so agents can plan, explain, and be audited.

- For teams: enable replay, comparison, branching, and remixing, bringing the time affordances of non-linear editing to everyday decision-making.

When time becomes visible, confidence and control improve. You don’t just wait for outcomes—you navigate them.

Four lenses for time

We organize temporal information in four synchronized layers—each answering a different question:

Physical: Where is it and how is it stored?

Files, formats, versions, provenance. Foundations for audit & reproducibility.

Image/Signal: What do raw signals say over time?

Histograms, sonograms, motion fields: compact “signatures” across minutes or hours.

Object/Entity: Who/what appears, and when?

Characters, instruments, locations, aligned to the timeline for “when-did-what-happen?” queries.

Discourse/Narrative: What does it mean in context?

Plot vs. story, plan vs. execution, hypothesis vs. evidence: relations (before/after, cause/effect) and multi-perspective re-tellings.

In film, these layers powered interactive “movie books” that let you enter a feature at structural points—by character, location, musical theme, or narrative beat. In operations, the same idea becomes a process book and a process browser.

A practical primitive: OM-Images

A durable trick from our movie-mapping days: OM-Images (Objects + Movements).

- O-image: what persists (appearance).

- M-image: what changes (motion/tempo).

Together they compress an hour into a glance—structure, rhythm, tempo—without losing the beats that matter. Today we apply OM-Images to microscope scans, user sessions, sensor logs, and multi-agent runbooks.
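To make the O/M split concrete, here is a minimal sketch in Python. It is not the original OM-Image construction, which this article does not spell out. It assumes, purely for illustration, that "what persists" can be approximated by a per-pixel average over time and "what changes" by the mean absolute difference between consecutive frames. The `Frame` type and the function names `o_image` and `m_image` are hypothetical.

```python
from typing import List

# Hypothetical representation: one flattened grayscale frame as a list of floats.
Frame = List[float]


def o_image(frames: List[Frame]) -> Frame:
    """Per-pixel mean over time: an assumed stand-in for 'what persists'."""
    n = len(frames)
    return [sum(pixel_values) / n for pixel_values in zip(*frames)]


def m_image(frames: List[Frame]) -> List[float]:
    """Mean absolute change between consecutive frames: an assumed
    stand-in for 'what changes'. Returns one value per frame transition."""
    return [
        sum(abs(b - a) for a, b in zip(prev, curr)) / len(curr)
        for prev, curr in zip(frames, frames[1:])
    ]


# Four tiny 4-pixel frames; the third pixel switches on halfway through.
frames = [
    [0.0, 1.0, 0.0, 1.0],
    [0.0, 1.0, 0.0, 1.0],
    [0.0, 1.0, 1.0, 1.0],
    [0.0, 1.0, 1.0, 1.0],
]
persistent = o_image(frames)  # one value per pixel
motion = m_image(frames)      # one value per transition
```

In this toy input, `persistent` shows the stable pixels at their constant values and the switching pixel at an intermediate value, while `motion` is zero everywhere except at the single transition where the change happens. Real signals would need a more careful choice of both summaries.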

From cinema to systems: what HMMC builds

We design interfaces that treat time as a first-class citizen:

- Zoom time like a map—from months to milliseconds—keeping landmarks aligned across layers.

- Branch & compare futures, reconcile paths, and keep reversible edits.

- Explain & audit: tie every recommendation to temporal evidence—“why now,” “what if earlier,” “what changes if we wait.”

- Compose narratives: generate regulator, clinic, and user-study views from the same timeline without re-authoring facts.

Under the hood, our systems keep versioned temporal provenance (Physical), feature signatures (Image), entity timelines (Object), and narrative graphs (Discourse) in lockstep—so humans and agents always point at the same moment when they say “this.”
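One way to picture the layers staying in lockstep is a single time anchor that is resolved against every layer at once. The sketch below is an illustrative assumption, not a description of HMMC's systems: the `Layer` class, the `snapshot` function, and all sample labels are hypothetical, and each layer is reduced to a sorted list of (start time, label) pairs.

```python
from bisect import bisect_right
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class Layer:
    """One temporal layer: entries are (start_seconds, label), sorted by start."""
    name: str
    entries: List[Tuple[float, str]] = field(default_factory=list)

    def at(self, t: float) -> Optional[str]:
        """Return the label with the latest start at or before t, else None."""
        starts = [start for start, _ in self.entries]
        i = bisect_right(starts, t)
        return self.entries[i - 1][1] if i else None


def snapshot(layers: List[Layer], t: float) -> Dict[str, Optional[str]]:
    """Resolve the same moment t against every layer."""
    return {layer.name: layer.at(t) for layer in layers}


# Hypothetical sample data for the four layers.
layers = [
    Layer("physical", [(0.0, "scan_v1.tiff"), (60.0, "scan_v2.tiff")]),
    Layer("signal", [(0.0, "low motion"), (45.0, "high motion")]),
    Layer("entity", [(10.0, "operator A"), (50.0, "operator B")]),
    Layer("narrative", [(0.0, "setup"), (40.0, "handoff")]),
]
view = snapshot(layers, 52.0)
```

Because every layer answers the same `t`, a human pointing at second 52 and an agent reasoning about second 52 get the same file version, signal state, entity, and narrative phase. A layer with nothing yet at that moment returns `None` rather than guessing.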

Design principles for temporal UX

- Make structure glanceable. Surface beats, scenes, and phase boundaries first.

- Layer, don’t drown. Peel from signal → entities → narrative (progressive disclosure).

- Expose uncertainty in time. Show gaps, lateness, and confidence bands.

- Keep edits reversible. Branching and rollback are core time affordances.

- Sync language and visuals. Every claim (“spike,” “delay,” “handoff”) gets a visible anchor.
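As a small illustration of exposing uncertainty in time, the sketch below finds gaps in a stream of timestamps so an interface could draw them explicitly instead of interpolating over them. The function name `find_gaps`, the `tolerance` factor, and the sample readings are assumptions made for this example. It also assumes the timestamps are already sorted.

```python
from typing import List, Tuple


def find_gaps(
    timestamps: List[float],
    expected_interval: float,
    tolerance: float = 1.5,
) -> List[Tuple[float, float]]:
    """Return (start, end) pairs where the spacing between consecutive
    timestamps exceeds tolerance * expected_interval.
    Assumes timestamps are sorted in ascending order."""
    limit = expected_interval * tolerance
    return [
        (a, b)
        for a, b in zip(timestamps, timestamps[1:])
        if b - a > limit
    ]


# Hypothetical sensor readings expected every 10 seconds.
readings = [0.0, 10.0, 20.0, 55.0, 65.0]
gaps = find_gaps(readings, expected_interval=10.0)
```

Each returned pair marks a stretch of the timeline where nothing was observed, which a view can render as a visible break with its own anchor.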

References & Downloads

Research Papers

H. Müller and E. Tan, “Movie maps,” 1999 IEEE International Conference on Information Visualization (IV'99), London, UK, pp. 348–353. doi: 10.1109/IV.1999.781581. Keywords: Image sequence analysis.

Download: movieMaps.pdf

H. Müller-Seelich and E. S. Tan, “Visualizing the Semantic Structure of Film and Video,” in Visual Data Exploration and Analysis VII (SPIE), 2000.

HMMC (Human–Machine–Mind Corporation) builds human–AI interfaces and workflows with time, provenance, and trust as first-class design elements.